25 Jun 2019

Tracking how many times an Issue fails code review or testing

People aren't perfect, and the same is true of software development teams. Software defects will inevitably crop up during development; however, a robust practice will ensure that code reviews catch quirks in the code. Regression and acceptance testing should also be carried out at each step, as they are designed to catch defects before and after changes are pushed to production. Another important part of a healthy team is tracking and reporting on defects to identify areas that need improvement and focus. This article focuses on reporting on one key metric: how often issues fail code review or testing.

Depending on how your teams are structured, you may want to see whether a certain development component is persistently associated with issues failing testing, or whether changes to a particular part of the codebase consistently fail code review. A dashboard that monitors and reports on these statistics helps identify problems and allows teams to make improvements, minimise future failures and be more confident in the work they produce.

Case Study

In this organisation, each scrum team is cross-functional, made up of Business Analysts, Developers and Testers. Every team member, especially the Developers, is expected to participate in code reviews. Once a developer deems an Issue (User Story, Bug or Task) ready, it is passed to a peer for code review. If the review is approved, the issue moves on to testing. However, if the code review raises questions or uncovers a glaring problem, the review fails and the issue is reassigned to the developer. Likewise, if testing finds a bug, the issue is reassigned to the team and moved back to In Progress.

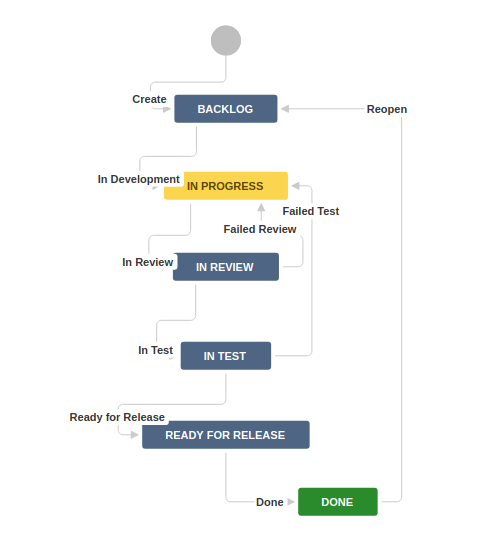

The workflow for a story or tasks for the team is shown below:

Having specific transitions from In Review → In Progress and from In Test → In Progress allows us to identify when an issue has failed code review or testing. These transitions also allow the team to be notified when either step fails, and they give the Scrum Master the input needed to report on problem areas.
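To make the idea concrete, here is a minimal sketch that replays an issue's status history and counts the two "failure" transitions described above. It is only an illustration, not how Jira or any add-on computes the value: the In Review, In Test and In Progress status names come from this team's workflow, while the `count_failures` function, the `FAILURE_TRANSITIONS` mapping, its labels and the sample history (including the To Do and Done statuses) are hypothetical.

```python
from collections import Counter

# Status pairs that, in this team's workflow, mean an issue was sent back.
# The labels on the right are illustrative names, not Jira terminology.
FAILURE_TRANSITIONS = {
    ("In Review", "In Progress"): "code_review_failed",
    ("In Test", "In Progress"): "testing_failed",
}


def count_failures(status_history):
    """Count failed code reviews and failed tests in an ordered list of statuses."""
    counts = Counter()
    # Compare each status with the one that immediately followed it.
    for from_status, to_status in zip(status_history, status_history[1:]):
        outcome = FAILURE_TRANSITIONS.get((from_status, to_status))
        if outcome is not None:
            counts[outcome] += 1
    return counts


# Hypothetical history: one failed review, then one failed test, then Done.
history = ["To Do", "In Progress", "In Review", "In Progress", "In Review",
           "In Test", "In Progress", "In Review", "In Test", "Done"]
print(dict(count_failures(history)))
# {'code_review_failed': 1, 'testing_failed': 1}
```

Because the failures are distinct transitions rather than statuses, an issue that passes through In Progress several times for other reasons is not miscounted.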

How to report on "Failed" transitions

At GLiNTECH we suggest using Jira Misc Custom Fields.

This tool provides a transition count custom field type whose value increases every time a configured transition is executed. In this instance, we want to count how many times a User Story, Bug or Task fails code review or testing.

The steps to do this are as follows:

Adding a Transition Count Custom Field

1. Click the cog icon (Jira administration) and then select Issues

2. Select the Custom Fields menu item on the left-hand side of the screen

3. Click the Add Custom Field button

4. Select the Advanced tab and choose Transition Count Field

5. Enter the name of the custom field (in this case, Code Review Failures) and select Create

Determining the ID of the Transition(s) to be Counted

To configure the custom field for counting transitions, the ID of the transition to be counted must be identified.

1. Click the cog icon (Jira administration) and select Issues

2. Select the Workflows menu item on the left-hand side of the screen

3. Select View for the workflow that contains the transition you want to count

4. Ensure that you are viewing the workflow in Text mode. Next to each transition is its ID in brackets (highlighted in red in the image below)

5. Note down the ID of the transition to be counted. You will need it when configuring the custom field

Configuring the Custom Field

Now that the Code Review Failures custom field has been created, it needs to be configured to count the correct transitions.

1. Click the cog icon next to the Code Review Failures custom field and select Configure

2. Select Edit Transition(s) to look for, then choose the transition or transitions the custom field should count

3. Enter the transition ID(s) noted in the previous section and the name of the workflow, then click Save.
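Conceptually, the configured field behaves something like the hypothetical model sketched here: it counts only executions of the listed transition IDs within the named workflow. Transition IDs are only unique within a single workflow, which is presumably why the workflow name is required as well. The `TransitionCountField` class, the workflow name `Team Workflow` and the transition ID `41` are illustrative assumptions, not the add-on's actual implementation or API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class TransitionCountField:
    """Hypothetical model of a transition count custom field."""

    workflow_name: str          # workflow the transition IDs belong to
    transition_ids: Set[int]    # transition IDs to count (e.g. In Review -> In Progress)
    counts: Dict[str, int] = field(default_factory=dict)  # issue key -> count

    def on_transition(self, issue_key: str, workflow_name: str, transition_id: int) -> None:
        """Record a transition execution; only configured transitions are counted."""
        if workflow_name != self.workflow_name or transition_id not in self.transition_ids:
            return  # other workflow or other transition: ignored
        self.counts[issue_key] = self.counts.get(issue_key, 0) + 1

    def value(self, issue_key: str) -> Optional[int]:
        """Current field value; None stands in for an empty field."""
        return self.counts.get(issue_key)


# Hypothetical configuration: transition 41 is "In Review -> In Progress".
code_review_failures = TransitionCountField("Team Workflow", {41})
code_review_failures.on_transition("PROJ-1", "Team Workflow", 41)
code_review_failures.on_transition("PROJ-1", "Team Workflow", 41)
code_review_failures.on_transition("PROJ-1", "Team Workflow", 21)   # not configured
code_review_failures.on_transition("PROJ-2", "Other Workflow", 41)  # other workflow

print(code_review_failures.value("PROJ-1"))  # 2
print(code_review_failures.value("PROJ-2"))  # None
```

Modelling "never failed" as an empty value rather than zero matches the reporting approach that follows, which filters on the field not being empty.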

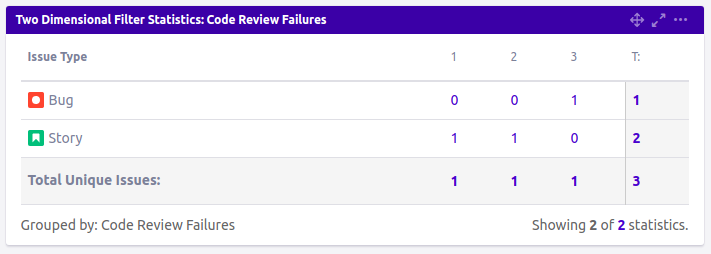

Reporting on Transition Counts

Reporting on transition counts is as simple as setting up a filter that returns all issues where the Code Review Failures field is not empty, then displaying that filter in a Two Dimensional Filter Statistics gadget.
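In JQL, a filter like the one described could be written as `"Code Review Failures" is not EMPTY`. The gadget then counts the matching issues by two fields, for example Component on one axis and Code Review Failures on the other, assuming both are available as statistic types. The Python sketch here mimics that cross-tabulation on a hypothetical list of issue records to show what the resulting table means; the `two_dimensional_stats` function, the field names and the data are made up, and each issue is assumed to have a single component.

```python
from collections import defaultdict


def two_dimensional_stats(issues, x_field, y_field):
    """Count issues per (x, y) value pair, skipping issues whose y_field is empty.

    Skipping empty values mirrors a filter such as
    '"Code Review Failures" is not EMPTY'.
    """
    table = defaultdict(lambda: defaultdict(int))
    for issue in issues:
        y_value = issue.get(y_field)
        if y_value is None:
            continue  # empty field: excluded by the filter
        table[issue[x_field]][y_value] += 1
    # Plain dicts are easier to print and compare than nested defaultdicts.
    return {x: dict(row) for x, row in table.items()}


# Hypothetical issue records; each issue has exactly one component here.
issues = [
    {"key": "PROJ-1", "component": "Billing", "code_review_failures": 2},
    {"key": "PROJ-2", "component": "Billing", "code_review_failures": 1},
    {"key": "PROJ-3", "component": "Billing", "code_review_failures": 2},
    {"key": "PROJ-4", "component": "Search", "code_review_failures": 1},
    {"key": "PROJ-5", "component": "Search", "code_review_failures": None},
]

print(two_dimensional_stats(issues, "component", "code_review_failures"))
# {'Billing': {2: 2, 1: 1}, 'Search': {1: 1}}
```

Each cell answers a question such as "how many Billing issues failed code review exactly twice?", which is the kind of pattern that points to a component needing attention.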

And there you have it: by using Jira Misc Custom Fields, you and your teams can report on code review and testing failures. This important metric can be used to identify areas of improvement for developers, schedule a potential code refactor, or help your teams estimate appropriately for future changes in that area.